Most CLOs have a measurement dilemma: They do not have the resources to evaluate learning’s impact, but are often required to show why learning matters.

Every other month, market intelligence firm IDC surveys Chief Learning Officer magazine’s Business Intelligence Board (BIB) on a variety of topics to gauge the issues, opportunities and attitudes that are important to senior learning executives. For the last several years, members of the BIB have been asked to provide annual insight into their outlook for the year ahead. This month IDC compares how CLOs feel about learning measurement in 2013 to their outlook in recent years.

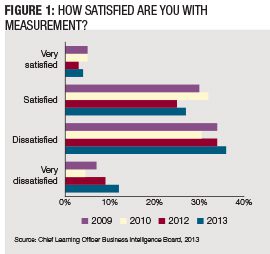

As organizations slowly recover from the economic crisis, during which they were repeatedly asked to justify spending, about half of CLOs report that their learning and development measurement programs are fully aligned with learning strategy. That share is similar to last year’s, suggesting a period of stability in learning measurement. In a small sign of retreat, slightly more CLOs than in 2012 are dissatisfied with their organization’s approach to measurement, but signs of progress are more evident.

Generally, measurement collection and reporting can be described as basic. Three-quarters of enterprises use general learning output data (courses, students, hours of training) to help justify learning’s impact. Two-thirds go further and report learning output aligned with specific learning initiatives. Measuring stakeholder and learner satisfaction with learning programs is also common. But more advanced applications of measurement, such as monitoring employee engagement, employee performance or business impact, are still constrained by time, resources and support.

Taking Measures

Learning professionals mostly agree on the value of measurement. When done properly, it can demonstrate learning’s impact on the company’s top and bottom lines. Effectively measuring impact also ensures greater participation in learning initiatives, which helps the learning organization set the stage for greater relevance: “There’s an urgent need for our business to measure and understand how learning contributes to meeting our corporate goals and objectives. We’ll need to make a clear distinction between return on expectation and return on investment, with the latter being more readily achievable with realistic targets in place,” said one survey respondent.

Key metrics may include employee performance, speed to proficiency, customer satisfaction and improved sales numbers. Yet learning leaders still have trouble accessing these metrics and finding the time and resources to conduct measurement.

In 2008, a majority of enterprises reported a high level of dissatisfaction with the learning measurement in their organizations. By 2010, that feeling had moderated substantially. In 2013, however, dissatisfaction increased again, perhaps because of higher expectations and continuing challenges in fully leveraging tools, staffing projects and effectively influencing leaders (Figure 1).

As some CLOs report:

“We have SO much data, but no idea what to do with it all!”

“We have covered the elemental aspects of measurement and are working to master the analysis of the data that are being produced.”

“Good efforts are made within data limitations.”

“Our systems don’t connect with one another, which makes it hard to look at things from an enterprise-wide point of view.”

The relationship between the learning organization’s role within the enterprise and its measurement capability is stark. When organizations are very satisfied with their approach to measurement, they also feel learning and development play a very important role in achieving organizational priorities. On the other hand, when measurement programs are weak, most CLOs report that their influence and role in helping achieve organizational priorities are also weak. Learning organizations that can demonstrate their impact can expect to have greater influence in their organizations when it matters.

Compared to prior years, the common forces working against satisfaction with measurement programs remained a combination of capability and support. Specifically, CLOs believe their ability to deploy effective measurement processes is limited by a lack of resources, an inability to bring together data from different functions, a lack of management support and a lack of funding.

Resources and leadership support are essential to develop an effective measurement program. But getting that support is challenging. One respondent illustrated the link between executive support and the learning organization’s ability: “I need to be able to have the time and resources to measure results. Currently, there is no corporate support for my objectives to make us a stronger training organization, which I believe would make us more profitable, through better retention and effective application of monetary and human resources.”

Others report that measurement programs are not as far along as they had hoped because their organizations don’t have the skills to leverage available data: “It is often very difficult to get data and resources to ‘triangulate’ on actual performance impact.” For the past several years, technology was cited as a barrier, but it has now dropped to the least-cited reason, named by slightly more than 10 percent of CLOs. What has grown in importance is an inability to bring necessary data together. The combination of an inability to generate impactful measures and executives’ unwillingness to participate and act on the data creates a difficult barrier to cross.

With these forces working against progress in measurement, there has been little change in its use. Consistent with past results, about 80 percent of companies do some form of measurement, with half of those using a manual process and half using some form of automated system.

The mix of collection approaches has changed little since 2008. There has been a slight increase in the percentage of respondents who use a manual process and a decrease in the percentage of enterprises that rely predominantly on LMS data (Figure 2).

Organizations report that learning platforms give them greater ability to make correlations between learning and performance, and that the absence of technology can be a significant burden. “It’s still too manual, sporadic and hard work,” one respondent said.

Correlate Learning to Outcomes

There has been an increase in the percentage of enterprises working to correlate learning with organizational change. Organizations are more frequently evaluating the impact learning has on employee satisfaction and engagement scores. Learning’s impact on customer satisfaction is also frequently measured. However, there are still areas where more work could be done. Fewer than half of all organizations evaluate learning’s impact on employee engagement or productivity, and fewer still measure its impact on employee retention. Part of the reason is that learning programs aren’t always expected to affect quality, retention or other metrics, but there is an opportunity to increase the use of analytics and establish learning’s impact on a wider set of business outcomes. Figure 3 shows how often organizations measure learning’s impact on potential outcomes.

Beyond benefiting the organization as a whole, when learning is effective the benefits of measurement also extend to the learning organization’s own bottom line. Organizations that can consistently tie learning to specific changes are more likely to train, but they train less: they focus learning efforts on the most appropriate people and topics, abandon useless courses and spend less money and time on learning overall.

Reason for Optimism

Despite the challenges, organizations are making progress. While 35 percent of CLOs are satisfied with the extent of measurement at their companies, nearly 80 percent are planning to increase their efforts, compared to 50 percent who reported intentions to increase measurement in 2010.

Thirty percent of CLOs report they will develop measurement programs to track learning’s impact on employee capability, equal to about half of the organizations that don’t currently measure it. About half of the enterprises that don’t already track learning’s impact on employee engagement will begin to do so. Measuring learning’s impact on customer satisfaction, overall business performance and formal return on investment is also on the agenda for many CLOs, but measuring learning’s impact on sales is still somewhat ambitious, with more than 50 percent of enterprises reporting they have no plans to add it to their measurement repertoire.

CLOs can take several steps to demonstrate learning’s impact:

• Establish metrics at the project or business unit level. While it may be tempting to “swing for the fences” and demonstrate learning’s value at the enterprise level, successful measurement programs typically start as smaller initiatives that focus on individual projects or business units. When working with smaller groups, there are typically fewer obstacles to interfere with the measurement process.

• Define success early. Instead of backing into a measurement program, define measurement objectives in advance. By defining with stakeholders what success will look like upfront, learning professionals can more easily identify and benchmark key metrics for measurement before learning is delivered, and make post-learning results easier to evaluate.

• Set expectations upfront with stakeholders. Help stakeholders who are interested in measurement to understand the commitment required to see assessment projects through to the end. That will eliminate some resistance during the measurement phase, and with pre-defined measures, engaged stakeholders can help align necessary support from other parts of the organization.

Companies that incorporate these guidelines into their assessment methodology should see a marked improvement in the success and relevance of their measurement initiatives and learning overall.

Cushing Anderson is program director for learning services at market intelligence firm IDC. He can be reached at editor@CLOmedia.com.