CLOs are regularly asked to demonstrate the value of training, but they often don’t have access to the resources or data to properly establish it. And they admit they need help.

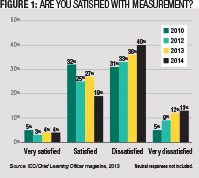

About 3 out of 4 CLOs report their learning and development measurement programs need to improve. Perhaps worse, fewer than half of enterprises report their measurement program is fully aligned with their learning strategy. To cap off the bad news, this year more CLOs are dissatisfied with their organizational approach to measurement, continuing a trend over the past two years.

Every other month, market intelligence firm International Data Corp., where the author works, surveys Chief Learning Officer magazine’s Business Intelligence Board on an array of topics to gauge the issues, opportunities and attitudes that make up the role of a senior learning executive. For this article, more than 230 CLOs shared their thoughts on learning measurement.

Taking Proper Measures

Generally, measurement collection and reporting can be described as basic. About 4 out of 5 enterprises use general training output data (courses, students, hours of training) to help justify training impact. About 2 out of 3 measure stakeholder and learner satisfaction with training programs. Only about half go further and report training output aligned with specific learning initiatives. More advanced applications of measurement, such as employee engagement, employee performance or business impact, are still constrained by time, resources and the lack of a solid measurement structure, and half or fewer enterprises measure them.

Learning professionals generally agree that when done properly, measurement can demonstrate training’s impact to the company’s top and bottom lines. Effective measurement also ensures organizational initiatives will more frequently include appropriate learning components. This helps the learning organization set the stage for increases in relevance. As one CLO reports, “As training professionals, it is important to understand whether the training provided is well-received by the stakeholders and most importantly the learner.” Another CLO is more direct: “Alignment to business goals gives relevance to the training, which will make the training more impactful.”

Key metrics may include employee performance, speed to proficiency, customer satisfaction and improved sales numbers. The challenge remains in gaining access to key metrics and finding the time and resources to conduct measurement.

In 2008, a majority of enterprises reported a high level of dissatisfaction with the state of training measurement. By 2010, that feeling had moderated substantially. However, in 2013 and again this year, there has been an increase in dissatisfaction, probably resulting from higher expectations and greater availability of tools and awareness combined with a continuing challenge to properly staff projects and effectively influence leaders (Figure 1).

CLOs report:

- “We have a significant gap that we need to assess/correct.”

- “There’s no solid process, format or system for post-training measurements.”

- There’s a “lack of structured processes to create a learning strategy linked to business objectives and measurement of effectiveness and impact of learning programs.”

The distance between the learning organization’s role and its measurement capability remains wide. When organizations were very satisfied with the approach to measurement, they also felt learning and development played a very important role in achieving organizational priorities. When the measurement programs were weak, most CLOs reported their influence and role in helping to achieve organizational priorities was also weak.

CLOs believe their ability to deploy an effective measurement process is limited by a lack of resources, an inability to bring data together from different functions and a lack of management support for the initiative.

Resources and leadership support are both essential to develop an effective measurement program. But getting that support remains a challenge. One respondent said, “Many managers look at training as a check-in-the-box instead of job impact. This attitude looks at training as more of a day off.” Another agreed: “We lack knowledgeable resources, standards of measure and tools to improve our ability to measure learning effectiveness.” For some, even when satisfied with the direction or scope of their analytics program, resources are still a constraint: “We’re doing all the right things … Just need resources and budget to expand the pilot or localized excellence to more opportunities.”

Others report measurement is not as far along as they hope because the organization doesn’t have the skills to position the insight with leaders. “We gather lots of data and calculate a number of metrics, but we don’t have a strategy for consistently reporting metrics to senior leadership or providing insights to support business decisions.” Other learning leaders suggest that technology is no longer a great barrier. Appropriate measurement must involve a wide range of systems, but disparate systems and an inability to get the full data set required to get answers hold measurement efforts back.

With the various forces aligned to hinder measurement, there has been little change in its use in learning and development. Consistent with past results, about 80 percent of companies do some form of measurement, with half of those using a manual process and half using some form of automated system.

The mix of collection approaches is similar to how it was performed as far back as 2008. There has been a slight decrease in the percentage of respondents who indicate they use a manual process and a significant increase in the percentage of enterprises that use LMS data predominantly.

Organizations report that learning platforms give them greater ability to make correlations between training and performance, and the absence of technology can be a significant burden. “It’s still too manual, sporadic and hard work.”

CLOs can use only the collection systems they have, but the systems used for data collection correlate strongly with the degree of satisfaction. The source of data that causes the most dissatisfaction is learning impact data captured directly from a widely used enterprise resource planning system. None of the CLOs who report an ERP as their source for learning metrics are satisfied with the extent of training measurement within their organizations.

On the other hand, combining LMS data with data from an ERP system, or combining LMS data with manually collected data, results in greater satisfaction (Figure 2). This suggests ERP data is not specific enough to appropriately evaluate the effect of training, but that LMS data alone is also insufficient.

Things Are Looking Up

There has been a meaningful increase in the percentage of enterprises working to correlate training to organizational change. Organizations are more frequently evaluating the effect training has on employee engagement scores and retention. Roughly half of enterprises also measure training’s effect on customer satisfaction. However, while it’s improving, only about half of all organizations evaluate the effect of training on employee engagement, and less than 40 percent measure productivity and retention.

Though training programs aren’t always expected to affect quality or retention, the opportunity for analytics to establish the effect training has on a wider set of business outcomes is obvious. Figure 3 shows how often organizations measure the effect of training on potential outcomes.

Measurement can affect both the broader enterprise and the bottom line for the training organization. Organizations that consistently tie training to specific changes are more likely to train, but they train less. They focus training on the most appropriate people and topics, and they often can abandon less-valuable training and spend less money overall.

Despite the obvious challenges, organizations are making progress. Some 23 percent of CLOs are satisfied with their company’s measurement, and nearly 60 percent of CLOs are planning to increase the training impact measures they undertake.

More than 30 percent of CLOs report they will develop measurement programs to track training effect on employee productivity. About 3 out of 4 enterprises that don’t already will begin to track training impact on employee engagement. Measuring training impact on customer satisfaction and overall business performance are also on many CLOs’ agendas.

CLOs can take several steps to demonstrate training impact. Three of the most significant practices are:

- Set expectations upfront with stakeholders. Help stakeholders understand the commitment required to see assessment projects through to the end. This will reduce resistance encountered during the measurement phase. Engaged stakeholders can help align necessary support from other parts of the organization.

- Define success early. By defining with stakeholders what success will look like upfront, learning professionals can more easily identify and benchmark key metrics before training is delivered, and make post-training results easier to evaluate.

- Establish metrics at the project or business unit level. While it may be tempting to demonstrate training’s value at the enterprise level, successful measurement programs typically start off as smaller initiatives that focus on projects or business units. With smaller groups, there are also typically fewer obstacles to interfere with the measurement process. It’s about understanding which barriers can be affected by training and which are influenced by other process factors. By focusing on objectives that can be influenced by training and using other tools to address the non-training issues, the stakeholder will have a more realistic understanding of what can be improved with focused training.

Companies that incorporate even these simple guidelines into their assessment methodology should see a marked improvement in the success and relevance of their measurement initiatives and training overall.